LZiaB Design Overview


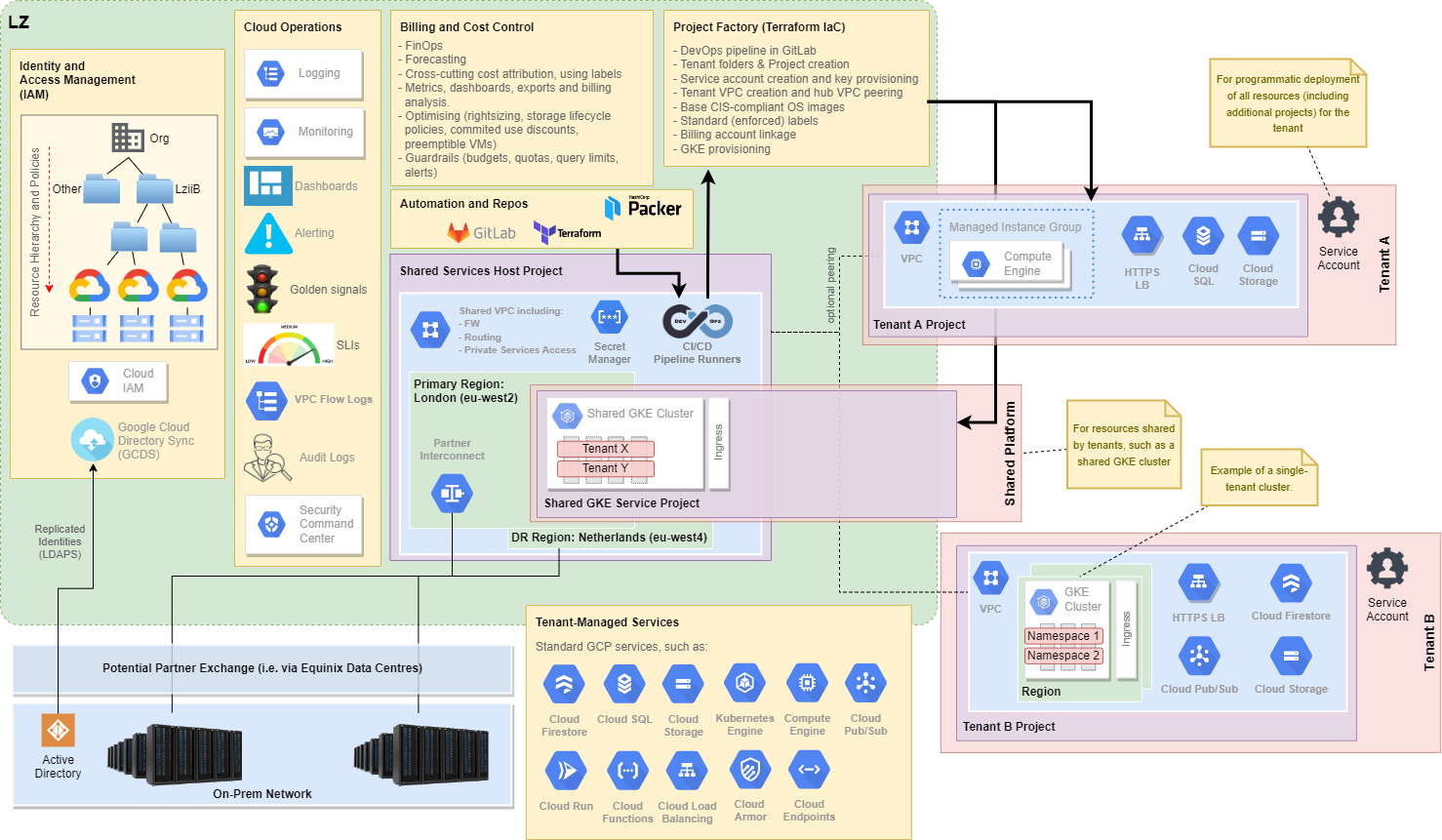

Design Overview

Organisation Resource Hierarchy

The LZiaB organisation hierarchy looks like this:

This allows management and security policies to be applied top down at any level in the hierarchy.
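IAM allow policies set at the organisation, folder and project levels are inherited downwards and are additive: the effective policy on a resource is the union of the bindings set on it and on all of its ancestors. The minimal Python sketch below models that inheritance for a hierarchy shaped as described in the notes that follow (organisation, tier folder, tenant folder, project). The node names, roles and group addresses are hypothetical. Organisation policy constraints are not modelled, because they follow different inheritance rules.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A node in the resource hierarchy: organisation, folder or project."""

    name: str
    parent: Optional["Node"] = None
    # IAM bindings set directly on this node: role -> set of members.
    bindings: dict = field(default_factory=dict)

    def grant(self, role: str, member: str) -> None:
        self.bindings.setdefault(role, set()).add(member)

    def effective_bindings(self) -> dict:
        """Union of the bindings on this node and on every ancestor."""
        effective: dict = {}
        node: Optional[Node] = self
        while node is not None:
            for role, members in node.bindings.items():
                effective.setdefault(role, set()).update(members)
            node = node.parent
        return effective


# Hypothetical hierarchy: organisation > Prod tier folder > tenant folder > project.
org = Node("some-org.com")
prod = Node("Prod", parent=org)
tenant = Node("payments", parent=prod)
project = Node("acme-ecp-prod-payments-api", parent=tenant)

# A grant at the organisation applies to every project beneath it.
org.grant("roles/logging.viewer", "group:security-auditors@some-org.com")
# A grant on the tenant folder applies only to that tenant's projects.
tenant.grant("roles/compute.viewer", "group:payments-devs@some-org.com")

for role, members in sorted(project.effective_bindings().items()):
    print(role, sorted(members))
```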

Notes on this hierarchy:

- The standard naming convention for a project is `{org}-ecp-{tier}-{tenant}-{project_name}`.

  - The `tier` can be one of `prod`, `Non-PRD` (i.e. any non-production environment), or `sbox` (sandbox).

- Ultimately, all resources in GCP are deployed into Google Cloud projects.

  - The project is the basic unit of organisation of GCP resources.

  - Resources belong to one and only one project.

  - Projects provide trust and billing boundaries.

- The `tenant` represents a given consumer of the platform.

  - A tenant is isolated from other tenants on the platform.

  - A tenant has significant autonomy to deploy their own projects, resources and applications within their own tenancy folders.

  - "With great power comes great responsibility." The tenant is responsible for the resources they deploy within their own projects.

  - A tenant will typically have tenant folders in both the `Prod` and `Non-PRD` folders, and in `Sbox` if required.
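GCP project IDs must be 6 to 30 characters long. They may contain only lowercase letters, digits and hyphens, must start with a letter, and must not end with a hyphen. These limits leave little room for long tenant or project names under this convention. The Python sketch below builds an ID and checks it against both the convention and those rules. It assumes the convention applies to the project ID rather than the display name. The lowercase token `nprd` for `Non-PRD` is a hypothetical spelling, since IDs cannot contain uppercase letters. The org, tenant and project values in the example are also made up.

```python
import re

# Assumed lowercase tier tokens; "nprd" for Non-PRD is hypothetical.
TIERS = {"prod", "nprd", "sbox"}

# GCP project ID rules: 6-30 chars, starts with a lowercase letter,
# only lowercase letters, digits and hyphens, no trailing hyphen.
PROJECT_ID_RE = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")


def project_id(org: str, tier: str, tenant: str, project_name: str) -> str:
    """Build a project ID following {org}-ecp-{tier}-{tenant}-{project_name}."""
    if tier not in TIERS:
        raise ValueError(f"unknown tier: {tier!r}")
    pid = f"{org}-ecp-{tier}-{tenant}-{project_name}"
    if PROJECT_ID_RE.fullmatch(pid) is None:
        raise ValueError(f"not a valid GCP project ID: {pid!r}")
    return pid


print(project_id("acme", "prod", "payments", "api"))
```

Because the fixed `-ecp-` segment and the separators already consume 8 characters, short org, tier and tenant tokens matter. For example, a project name like `customer-analytics-platform` cannot fit.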

Identity and Access Management

- LZiaB resources may only be managed by Google Workspace or Cloud Identity accounts that are tied to the `some-org.com` domain.

- Users are mastered on-premises in Active Directory (AD), and synchronised from AD to Google Cloud using one-way Google Cloud Directory Sync (GCDS).

- Identities may be users, groups, the entire domain, or service accounts.

- Typically, access to resources is not granted to individual accounts, but to groups. Groups in LZiaB will be named using this naming convention: `_gcp-{division}-{platform}-{tier}-{tenant}-{functional_role}`

- User identities are authenticated to Google Cloud using PingIdentity SSO with multi-factor authentication.

- Service accounts are associated with a given application. They allow applications and certain GCP services (e.g. GCE instances) to access other GCP services and APIs. Organisational security policies will be enforced to restrict the use of service accounts and, for example, to prevent the creation and sharing of service account keys.

- Note that provisioning of all resources in all tenant projects (outside of `Sbox`) may only be performed using a dedicated service account, created by the automated project factory. This is how we enforce our automation principle.


- Identities are given access to cloud resources by assigning roles. A role is a collection of permissions. To enforce the principle of least privilege, we will mandate the use of granular roles that carry only the appropriate permissions. This is controlled through security policy.
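The group naming convention and the "groups, not individuals" rule lend themselves to automated checks on IAM bindings. The minimal Python sketch below makes four assumptions. First, each convention component is a single lowercase alphanumeric token with no embedded hyphens. Second, service accounts are acceptable members, since provisioning is done by a dedicated service account. Third, the basic roles `roles/owner`, `roles/editor` and `roles/viewer` are rejected outright. Fourth, the `fin` division and the example addresses are hypothetical.

```python
import re

# Assumption: every component is a lowercase alphanumeric token without hyphens.
GROUP_RE = re.compile(
    r"_gcp-(?P<division>[a-z0-9]+)-(?P<platform>[a-z0-9]+)-(?P<tier>[a-z0-9]+)"
    r"-(?P<tenant>[a-z0-9]+)-(?P<functional_role>[a-z0-9]+)@some-org\.com"
)

# Basic (primitive) roles are too coarse-grained to be granted.
BASIC_ROLES = {"roles/owner", "roles/editor", "roles/viewer"}


def parse_group(email: str) -> dict:
    """Split a group address into its naming-convention components."""
    match = GROUP_RE.fullmatch(email)
    if match is None:
        raise ValueError(f"group does not follow the naming convention: {email!r}")
    return match.groupdict()


def binding_violations(role: str, members: list) -> list:
    """Return a description of every rule an IAM binding breaks."""
    problems = []
    if role in BASIC_ROLES:
        problems.append(f"basic role not allowed: {role}")
    for member in members:
        # IAM members look like "group:x@y", "user:x@y" or "serviceAccount:x@y".
        kind, _, identity = member.partition(":")
        if kind == "group":
            if GROUP_RE.fullmatch(identity) is None:
                problems.append(f"group off-convention: {identity}")
        elif kind != "serviceAccount":
            problems.append(f"grant to a group instead of {kind}: {identity}")
    return problems


print(parse_group("_gcp-fin-ecp-prod-payments-developer@some-org.com"))
print(binding_violations("roles/editor", ["user:alice@some-org.com"]))
```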

Networking

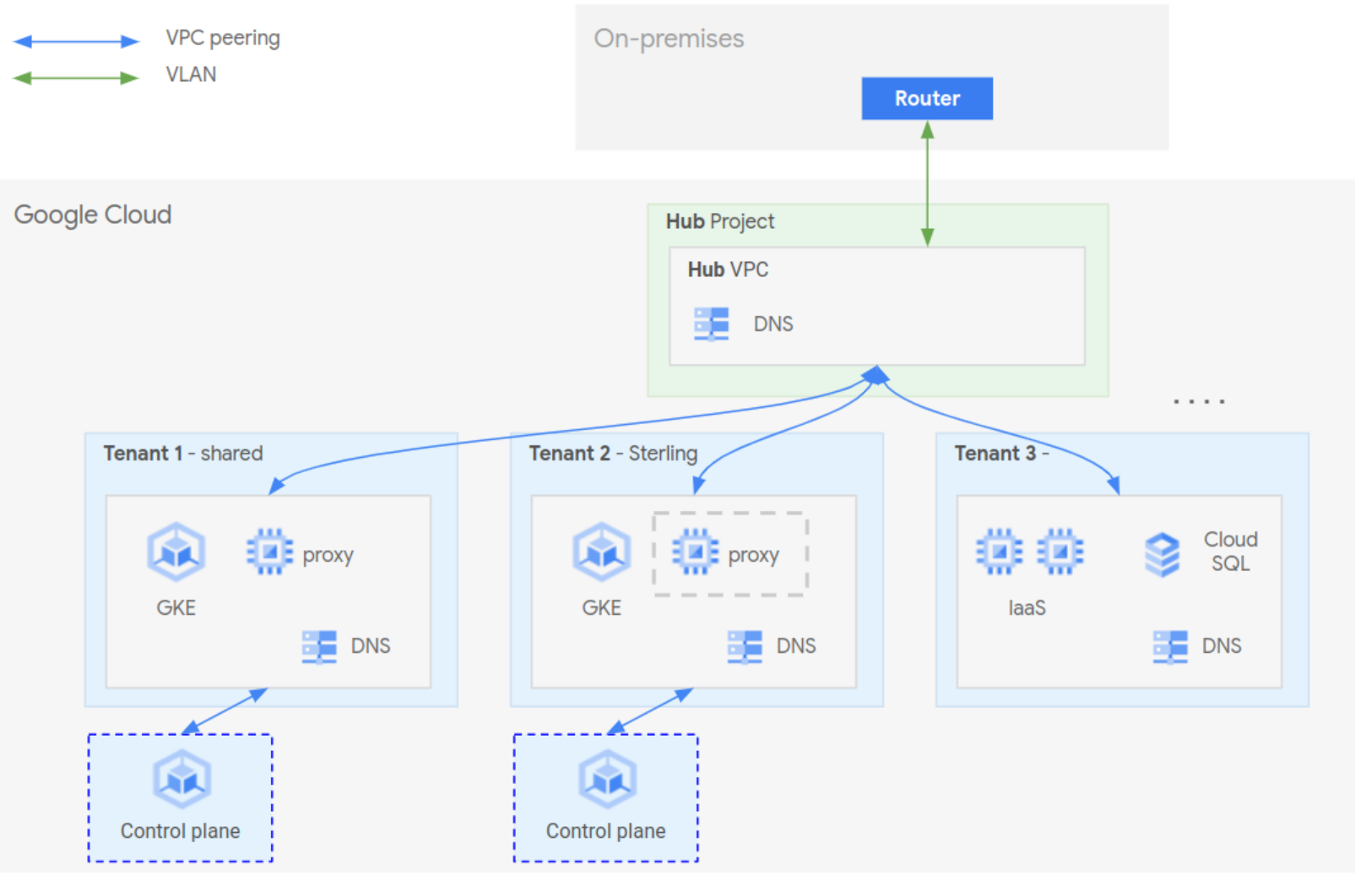

LZiaB uses a hub-and-spoke network architecture:

- A common VPC network acts as the hub, and hosts centralised networking and security resources. This includes private IP connectivity, via the SLA-backed, high-bandwidth / low-latency Interconnect, to the on-premises network.

- Each tenant project will have its own spoke VPC network. Thus, tenants have full autonomy and control over the resources deployed within their own VPC.

- Tenant VPC networks may be peered to the hub's common VPC network. This is how tenants can:

- Obtain private connectivity to the on-premises network (if appropriate).

- Be funnelled through centralised security tooling when accessing other networks (if appropriate).

- There is expected to be a shared tenant VPC to host, for example, a shared GKE cluster, for those tenants who have no specific network requirements.

- DNS peering will allow Google Cloud resources to perform DNS resolution against on-premises infrastructure, e.g. so that we can resolve hostnames in the `some-org.com` domain.

Conceptually, it looks like this:

The Hub-and-Spoke design exists in Production, and is replicated in Non-Production. Thus, there are actually two hubs, each with a common VPC, and each providing Interconnect-based connectivity to the on-premises network.
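The reachability rules that follow from this design can be captured in a small Python model. It is a sketch under three assumptions. Each hub exports its on-premises (Interconnect) routes to its peers. Spokes peer only with the hub of their own tier. Spokes are not peered with each other. Because VPC Network Peering is not transitive, two spokes peered to the same hub still cannot reach each other through it. The VPC names and the `nprd` tier token are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Vpc:
    name: str
    tier: str  # hypothetical tokens: "prod" or "nprd"
    is_hub: bool = False
    peers: set = field(default_factory=set)


def peer(hub: Vpc, spoke: Vpc) -> None:
    """Peer a spoke with the hub of its tier; peering is configured on both sides."""
    if not hub.is_hub or spoke.is_hub:
        raise ValueError("a spoke must be peered with a hub")
    if hub.tier != spoke.tier:
        raise ValueError(f"{spoke.name} ({spoke.tier}) cannot peer with {hub.name} ({hub.tier})")
    hub.peers.add(spoke.name)
    spoke.peers.add(hub.name)


def reachable(src: Vpc, vpcs: dict) -> set:
    """Networks src can reach: itself, its direct peers and, via a hub, on-premises.

    Peering is not transitive, so routes a hub learns from one spoke
    are not passed on to another spoke.
    """
    result = {src.name} | src.peers
    if src.is_hub or any(vpcs[name].is_hub for name in src.peers):
        result.add("on-prem")
    return result


hub_prod = Vpc("hub-prod", "prod", is_hub=True)
hub_nprd = Vpc("hub-nprd", "nprd", is_hub=True)
payments = Vpc("payments-prod", "prod")
claims = Vpc("claims-prod", "prod")
vpcs = {v.name: v for v in (hub_prod, hub_nprd, payments, claims)}

peer(hub_prod, payments)
peer(hub_prod, claims)
print(sorted(reachable(payments, vpcs)))  # claims-prod is not reachable
```

Modelling the two hubs as separate objects mirrors the Production and Non-Production split described above: a spoke can only ever reach the on-premises network through the hub of its own tier.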

Regions and Zones

Regions are independent geographic areas (for example, London or the Netherlands), each made up of multiple zones.

Zones are effectively data centre campuses, with each zone separated by a few km at most. A zone can be considered a single independent failure domain within a given region.

High-availability is achieved by deploying resources across more than one zone within a region. Consequently, production resources in LZiaB should generally be deployed using regional resources, rather than zonal, where possible.

However, deployment across multiple zones within a single region is insufficient to safeguard against a major geographic disaster event. For this reason, any application that requires disaster recovery capability will need to be deployable across two separate regions. To support this requirement, LZiaB has connectivity to on-premises from two separate regions:

- Primary region: London (`europe-west2`)

- Standby region: Netherlands (`europe-west4`)
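The two requirements can be checked mechanically against the zones an application is deployed to: multiple zones for high availability and a second region for disaster recovery. The Python sketch below is a simplified reading of the rules above. It treats "highly available" as spanning at least two zones in the primary region. It treats "DR-capable" as having at least one zone in each of the primary and standby regions. These thresholds and the function names are assumptions for illustration.

```python
PRIMARY_REGION = "europe-west2"  # London
STANDBY_REGION = "europe-west4"  # Netherlands


def region_of(zone: str) -> str:
    """Zone names are the region name plus a suffix, e.g. europe-west2-a."""
    return zone.rsplit("-", 1)[0]


def assess(zones: list) -> dict:
    """Classify a deployment by the zones it spans (simplified, assumed rules)."""
    by_region: dict = {}
    for zone in zones:
        by_region.setdefault(region_of(zone), set()).add(zone)
    primary = by_region.get(PRIMARY_REGION, set())
    standby = by_region.get(STANDBY_REGION, set())
    return {
        # HA: spans at least two zones in the primary region.
        "high_availability": len(primary) >= 2,
        # DR: has a footprint in both the primary and the standby region.
        "disaster_recovery": bool(primary) and bool(standby),
    }


print(assess(["europe-west2-a", "europe-west2-b"]))
print(assess(["europe-west2-a", "europe-west2-b", "europe-west4-a"]))
```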

Outbound Internet Connectivity

Outbound connectivity from LZiaB resources to the Internet is routed through our organisation's Zscaler Internet Access gateway. This allows security controls to be applied centrally.

Inbound Connectivity from the Internet

Google Compute Engine (GCE) instances will only be given private IP addresses; they will never be given external IP addresses. Consequently, these instances are not directly reachable from any external network; this includes from the Internet.

Therefore, if external access to an LZiaB-hosted service is required, it must be provided via a load balancer. Routing inbound traffic through a load balancer means:

- The load balancer acts as a reverse proxy; external clients only see the public IP address of the load balancer.

- Internal resources do not need external IP addresses.

- Layer 7 firewalling (WAF) can be applied at the load balancer using Cloud Armor, whose preconfigured WAF rules mitigate a number of OWASP Top 10 threats.

- Identity-level access control can be applied on an application basis, using the Cloud Identity-Aware Proxy (IAP).
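Compliance with the rule that GCE instances carry only private IP addresses can also be audited from instance metadata. In the Compute Engine API, an external IPv4 address is attached to a network interface through an entry in its `accessConfigs` list. An interface with no access configs therefore has no external IPv4 address. The Python sketch below scans instance records in the shape returned by `gcloud compute instances list --format=json`. The inline sample data is made up, and IPv6 access configs are not checked.

```python
import json

# Made-up sample in the shape of `gcloud compute instances list --format=json`.
SAMPLE = """[
  {"name": "app-1",
   "networkInterfaces": [{"networkIP": "10.0.0.2"}]},
  {"name": "app-2",
   "networkInterfaces": [{"networkIP": "10.0.0.3",
                          "accessConfigs": [{"type": "ONE_TO_ONE_NAT",
                                             "natIP": "203.0.113.10"}]}]}
]"""


def instances_with_external_ips(instances: list) -> list:
    """Names of instances with at least one external IPv4 access config."""
    offenders = []
    for instance in instances:
        interfaces = instance.get("networkInterfaces", [])
        # A non-empty accessConfigs list means an external IPv4 address is configured.
        if any(nic.get("accessConfigs") for nic in interfaces):
            offenders.append(instance["name"])
    return offenders


print(instances_with_external_ips(json.loads(SAMPLE)))
```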

Access to Google APIs and Services

Private Google Access is enabled on all subnets in LZiaB VPC networks. This allows instances with no external IP address to connect to Google APIs and services, such as BigQuery, Container Registry, Cloud Datastore, Cloud Storage, and Cloud Pub/Sub.

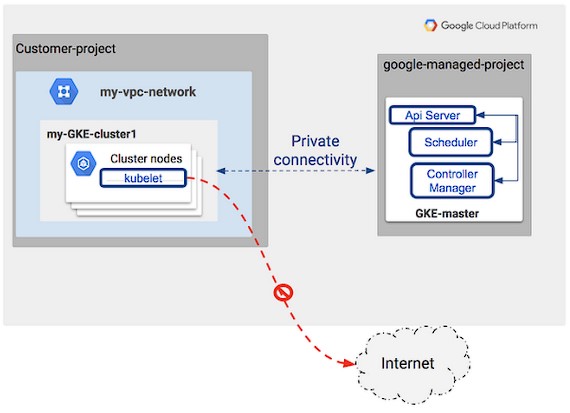
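Private Google Access is configured per subnet. In the Compute Engine API, it appears as the boolean `privateIpGoogleAccess` field of each subnetwork. The minimal Python sketch below flags subnets where the feature is not enabled. It uses records shaped like the output of `gcloud compute networks subnets list --format=json`. The sample subnets are made up, and a missing field is treated as disabled.

```python
import json

# Made-up sample in the shape of `gcloud compute networks subnets list --format=json`.
SAMPLE = """[
  {"name": "tenant-a-euw2", "privateIpGoogleAccess": true},
  {"name": "tenant-b-euw2", "privateIpGoogleAccess": false},
  {"name": "tenant-c-euw4"}
]"""


def subnets_without_pga(subnets: list) -> list:
    """Names of subnets where Private Google Access is not enabled."""
    return [s["name"] for s in subnets if not s.get("privateIpGoogleAccess", False)]


print(subnets_without_pga(json.loads(SAMPLE)))
```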

GKE Control Plane Connectivity

Connectivity between GKE cluster “worker” nodes and the cluster’s control plane will be achieved using VPC network peering.

Security Overview

- To adhere to the principle of least privilege, Google IAM is used to assign curated and custom roles to Google groups.

- Roles are granted only to groups, not to individual users.

- Basic (aka primitive) roles are disallowed; they do not provide sufficient granularity.

- Any changes to roles, groups or IAM policy are audited.

- Audit logs are exposed using the Security Command Centre.

- A number of organisation security policies are applied top down. These restrict the ability to perform some actions. For example, some of the policies applied ensure that:

- Public (external) IP addresses cannot be assigned to compute instances.

- Public IP addresses cannot be assigned to Cloud SQL instances.

- Default VPC networks cannot be created. Thus, tenants may only create custom networks, in specified regions.

- Service account keys may not be created or uploaded.

- OS Login is enforced, ensuring that SSH access to instances uses IAM-controlled Google identities, with SSH keys managed securely by Google. This removes the need to store and manage SSH keys separately on the instances themselves.

- Secure access to private resources (such as GCE instances or Kubernetes control planes) will be provided using a secure bastion host pattern, which uses OS Login and the Identity-Aware Proxy so that only authorised users can connect. For example, where a tenant needs to administer instances using SSH, this is the pattern to use.

- External HTTPS access to internal resources is only possible via the HTTPS load balancer. This ensures external clients have no visibility of internal IP addresses.

- Connectivity to the Internet is routed through the Zscaler Internet Access gateway.

- Additionally, web application firewall rules and allow-lists can be applied at the load balancer using Google Cloud Armor.

- Google Compute Engine (GCE) instances will only be built as Shielded VMs, using Centre for Internet Security (CIS)-compliant VM images. Off-the-shelf Google images that offer Shielded, CIS-compliant variants can be seen here.

- GCE instances (including GKE nodes) are kept current through the automatic update of underlying images, and automatic recreation of instances to use new images.

- Google Kubernetes Engine (GKE) clusters will always be private, and thus will only be accessible using internal private IP addresses. Additionally, the control plane will only be exposed using a private endpoint.

- GKE clusters are hardened:

- Basic (username/password) authentication is not permitted.

- Network policies limit connectivity between namespaces in the cluster. Exceptions must be explicitly configured. This limits lateral movement within a Kubernetes cluster.

- Workload Identity ensures granular control of access to Google services and APIs.

- Cloud IAM and Kubernetes Role Based Access Controls (RBAC) are used to control access to Kubernetes objects.

- Google Security Command Centre (SCC) provides a single centralised platform for aggregating and viewing security information. This includes:

- Automatic detection of threats, vulnerabilities, misconfigurations, and drift from secure configurations.

- Anomaly detection, e.g. for detection of behaviour that might signal malicious activity.

- Web security scanning.

- Container threat detection.

- Reporting of potentially sensitive data and PII.

- Reporting on VPC Service Controls.

- Compliance management.

- Actionable security recommendations.
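Several of the organisation policies listed above map directly onto predefined organisation policy constraints. The Python sketch below generates policy documents in the shape used by the Organization Policy v2 API. Each document has a `name` of the form `organizations/ORG_ID/policies/CONSTRAINT` and a `spec` containing rules. The documents could, for example, be saved to files and applied with `gcloud org-policies set-policy`. The organisation ID is hypothetical. The mapping covers only the policies named above that have an obvious predefined constraint, and is not presented as LZiaB's actual policy set.

```python
import json

ORG_ID = "123456789012"  # hypothetical organisation ID

# Boolean constraints that are simply enforced.
BOOLEAN_CONSTRAINTS = [
    "sql.restrictPublicIp",                  # no public IPs on Cloud SQL instances
    "compute.skipDefaultNetworkCreation",    # no default VPC networks
    "iam.disableServiceAccountKeyCreation",  # no service account key creation
    "iam.disableServiceAccountKeyUpload",    # no service account key upload
    "compute.requireOsLogin",                # OS Login enforced
    "compute.requireShieldedVm",             # Shielded VMs only
]


def org_policies(org_id: str) -> list:
    """Build Organization Policy v2 documents for the constraints above."""
    parent = f"organizations/{org_id}"
    policies = [
        {"name": f"{parent}/policies/{c}", "spec": {"rules": [{"enforce": True}]}}
        for c in BOOLEAN_CONSTRAINTS
    ]
    # List constraint: deny external IP addresses on all VM instances.
    policies.append({
        "name": f"{parent}/policies/compute.vmExternalIpAccess",
        "spec": {"rules": [{"denyAll": True}]},
    })
    return policies


for policy in org_policies(ORG_ID):
    print(json.dumps(policy))
```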