Your Tenancy

Page Contents

- Tenant Factory Overview

- Tenant Folders and Projects

- Default Tenant Groups

- Service Account

- Tenant Networking

- Google Kubernetes Engine (GKE) Clusters

- Your GitLab and Infrastructure Code

- Creating More Projects

- Accessing Your Internal Resources

Tenant Factory Overview

Following your tenancy request, you will be established in LZiaB as a new tenant. This approach allows you - as a tenant - to be responsible for deploying and managing your own resources within your Google projects. This increases your agility and reduces your dependency on the central Cloud Platform Team.

The automated tenant factory will generate a set of resources for you, in the LZiaB environment. This includes:

- Your tenant folder in the Google resource hierarchy.

- Your Prod, Non-Prod (Flex) and Sandbox folders.

- An initial spoke VPC network in each of Prod and Flex.

- Peering of your spoke networks to the appropriate Hub network.

- An optional bastion host in each spoke VPC, secured with the Identity-Aware Proxy (IAP).

- An initial monitoring dashboard, which can be enhanced to your needs.

- Service accounts for each of the Prod and Flex folders, with the permissions required to deploy resources under these folders.

- Tenant IAM groups with appropriate roles attached.

- A project factory code example - i.e. Terraform IaC - to help tenants create additional projects in their tenancy.

- Fabric module examples - i.e. Terraform IaC - to help tenants get started in deploying resources to their projects.

Tenant Folders and Projects

As a new tenant, you will have a tenant folder created under each of the Prod, Flex, and Sandbox folders. An initial project will be created for you under each of these tenant folders. You can create more projects in your tenancy using your service account.

Projects will be named according to the following naming standard:

{org}-ecp-{tier}-{tenant}-{project_name}

{tier} is one of prod, npd, or sbox.

As an example, your initial project names might look like these:

{org}-ecp-prod-pdp-app_foo

{org}-ecp-sbox-selling-app_bar

{org}-ecp-npd-ordering-sterling_1
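The naming standard above can be sketched as a small shell helper. This is an illustration only: the function name `project_name`, its argument order, and the org value `acme` are assumptions and not part of LZiaB. The helper rejects any tier other than the three listed above.

```shell
# Compose a project name as {org}-ecp-{tier}-{tenant}-{project_name}.
# Hypothetical helper; arguments are org, tier, tenant, project name.
project_name() {
  local org="$1" tier="$2" tenant="$3" name="$4"
  case "$tier" in
    prod|npd|sbox) ;;  # the only tiers named in the standard
    *) echo "tier must be one of: prod, npd, sbox" >&2; return 1 ;;
  esac
  printf '%s-ecp-%s-%s-%s\n' "$org" "$tier" "$tenant" "$name"
}

# "acme" stands in for your real {org} value.
project_name acme prod pdp app_foo
```

Checking the tier up front means a mistyped tier fails immediately instead of producing a name that does not match the standard.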

Default Tenant Groups

New tenants will be given a default set of groups, with appropriate roles for their project(s). The tenant factory will assign these groups to your tenancy.

| Group Name | Access to |
|---|---|
| _gcp-{org}-ecp-<tenant>-admin | Admin access for all projects in your tenancy |
| _gcp-{org}-ecp-prod-<tenant>-viewer | View access for your Prod tenant hierarchy |
| _gcp-{org}-ecp-npd-<tenant>-viewer | View access for your Non-Prod (Npd) tenant hierarchy |
| _gcp-{org}-ecp-prod-<tenant>-support | Support access (including logging and monitoring access) for your Prod tenant hierarchy |
| _gcp-{org}-ecp-npd-<tenant>-support | Support access (including logging and monitoring access) for your Non-Prod (Npd) tenant hierarchy |
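To see which group names to expect for a given tenant, the pattern in the table can be expanded with a short shell sketch. The function name `tenant_groups` and the values `acme` and `pdp` are hypothetical. The sketch only prints names; it does not create or query any groups.

```shell
# Print the five default group names for a tenant, in table order.
# Hypothetical helper; arguments are org and tenant.
tenant_groups() {
  local org="$1" tenant="$2" tier role
  printf '_gcp-%s-ecp-%s-admin\n' "$org" "$tenant"
  for role in viewer support; do
    for tier in prod npd; do
      printf '_gcp-%s-ecp-%s-%s-%s\n' "$org" "$tier" "$tenant" "$role"
    done
  done
}

# "acme" and "pdp" are placeholder org and tenant values.
tenant_groups acme pdp
```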

Service Account

You will be provided with a service account which has the authority to deploy resources within your tenancy. Outside of your sandbox projects, this is the only way you can deploy resources in your Google projects.

Tenant Networking

With your tenancy, you can optionally have your own VPC network in any of your projects. We refer to your tenant network as a spoke network. This spoke network will be deployed by the tenant factory. Furthermore, the spoke network will be peered to the LZiaB hub network, thus providing you with the ability to connect to on-premises resources using internal (private) IP addressing, over a highly available interconnect.

You are free to:

- Deploy resources to your own VPC networks.

- Configure cloud firewall rules within your spoke networks.

If you intend to store sensitive data on your network and require perimeter controls to limit data exfiltration through Google APIs, then VPC Service Controls can be enabled for your VPCs.

Google Kubernetes Engine (GKE) Clusters

- LZiaB will host regional (highly available) multitenant GKE clusters, managed by the Cloud Platform Team. If your tenancy requires container orchestration, then this shared GKE cluster is the default hosting environment. Tenants within the cluster will be deployed to their own Kubernetes namespaces, fully isolated from each other.

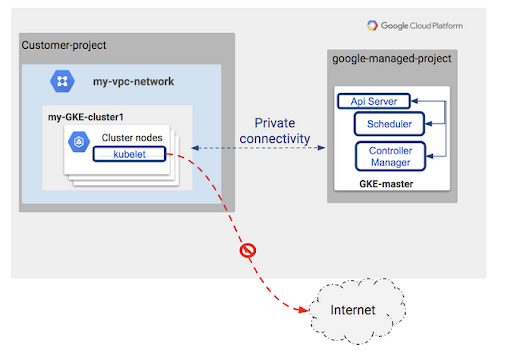

- You can optionally deploy your own private GKE cluster to any of your tenant VPCs. This may be necessary if your tenancy requires full administrative control over the cluster. In this scenario, you will be responsible for managing your own cluster.

- All GKE clusters deployed to LZiaB will be private. This includes the control plane.

- Access to the control plane will only be possible using a bastion host deployed to the same VPC network as the GKE cluster itself, secured using IAP.
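Once you are logged in to the bastion host, reaching a private control plane might look like the sketch below. It assumes the bastion has `gcloud`, `kubectl`, and the GKE authentication plugin installed, and that your user has access to the cluster; none of this is confirmed by this page. The function name `gke_pods` and its arguments (cluster, region, project, namespace) are hypothetical. The `--internal-ip` flag tells `gcloud` to write the cluster's internal endpoint into your kubeconfig.

```shell
# Run on the bastion host, inside the same VPC as the cluster.
# Hypothetical helper; arguments are cluster, region, project ID, namespace.
gke_pods() {
  # Fetch credentials that point at the private (internal) control-plane endpoint,
  # then list pods in the given namespace if that succeeded.
  gcloud container clusters get-credentials "$1" \
    --region="$2" --project="$3" --internal-ip \
    && kubectl get pods --namespace="$4"
}

# Example call, commented out because every value is a placeholder:
# gke_pods my-cluster europe-west2 my-project-id my-namespace
```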

Your GitLab and Infrastructure Code

As a new tenant, you will need to store your project’s code in GitLab. This includes all the IaC you will use to deploy resources into your LZiaB environments. Within the Organisation’s GitLab, the hierarchy looks like this:

[Diagram: GitLab group hierarchy. The TEN group contains "Your Tenancy" and "Sample Tenant". "Your Tenancy" contains "Some Project" and "Some Other Project"; "Sample Tenant" contains "Sample Tenant Project".]

You will need to do the following:

- Create GitLab user accounts for any users in your team/tenancy that do not yet have a GitLab user account.

- Decide who will be your GitLab tenancy subgroup owner(s).

- Submit a request to the LZiaB (GitLab) owners to create your tenancy subgroup. At this time, you will need to inform the LZiaB owners of at least one tenancy subgroup owner.

At this point, you will have the ability to create further subgroups and projects within your GitLab tenancy subgroup.

Creating More Projects

TODO: Cloud Platform Team to provide specific instructions on how a tenant creates new projects in their tenancy, using the project factory.

Accessing Your Internal Resources

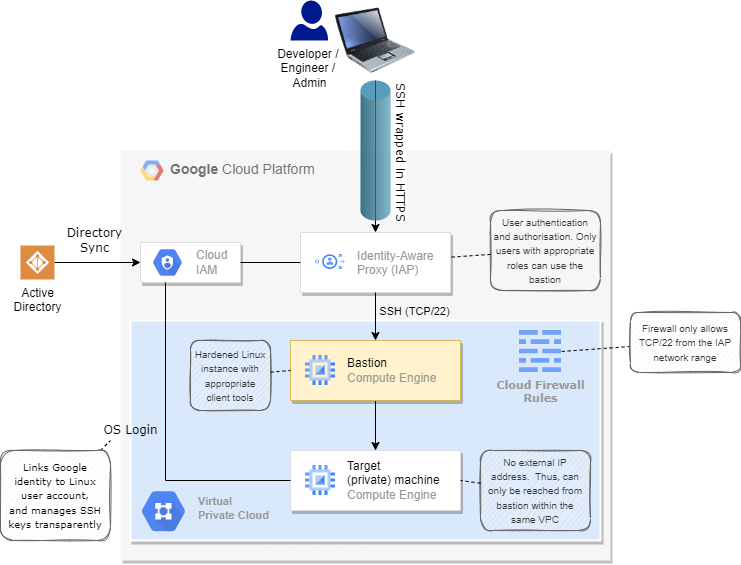

As enforced by security policy, any GCE instances or GKE clusters you deploy within LZiaB will only have internal IP addresses. Consequently, it is not possible to connect to these resources directly from any network outside of your VPC, including from the Internet.

If you need to connect to these machines (e.g. for administrative activity), you must use the bastion pattern. Within LZiaB, you have the option to deploy a bastion host within your tenancy. (Some of you may be familiar with the term jump box.) This machine provides a single fortified entry point, accessible from outside the VPC, but only to authorised users.

How it works:

- You connect to your bastion using the SSH button in the Google Cloud Console, or from a client with the Google Cloud CLI installed:

gcloud compute ssh <target_instance> --tunnel-through-iap

- Google connects to the Identity-Aware Proxy, which will prompt for authentication if your user ID is currently unauthenticated.

- Google then checks the user against roles assigned. If the user has appropriate roles, the user is connected to the bastion host.

- From this bastion host, the user can connect to private resources in their VPC.
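The steps above can be wrapped in a small shell helper so that the zone and project are always stated explicitly. This is a sketch under assumptions: the function name `bastion_ssh` and the example values are hypothetical, and your bastion name, zone, and project ID will differ. `--zone`, `--project`, and `--tunnel-through-iap` are standard `gcloud compute ssh` flags.

```shell
# Open an SSH session to a bastion host through IAP.
# Hypothetical helper; arguments are instance name, zone, project ID.
bastion_ssh() {
  gcloud compute ssh "$1" --zone="$2" --project="$3" --tunnel-through-iap
}

# Example call, commented out because every value is a placeholder:
# bastion_ssh my-bastion europe-west2-a my-project-id
```

Passing the zone and project explicitly avoids depending on whatever defaults are set in your local `gcloud` configuration.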

Useful links: